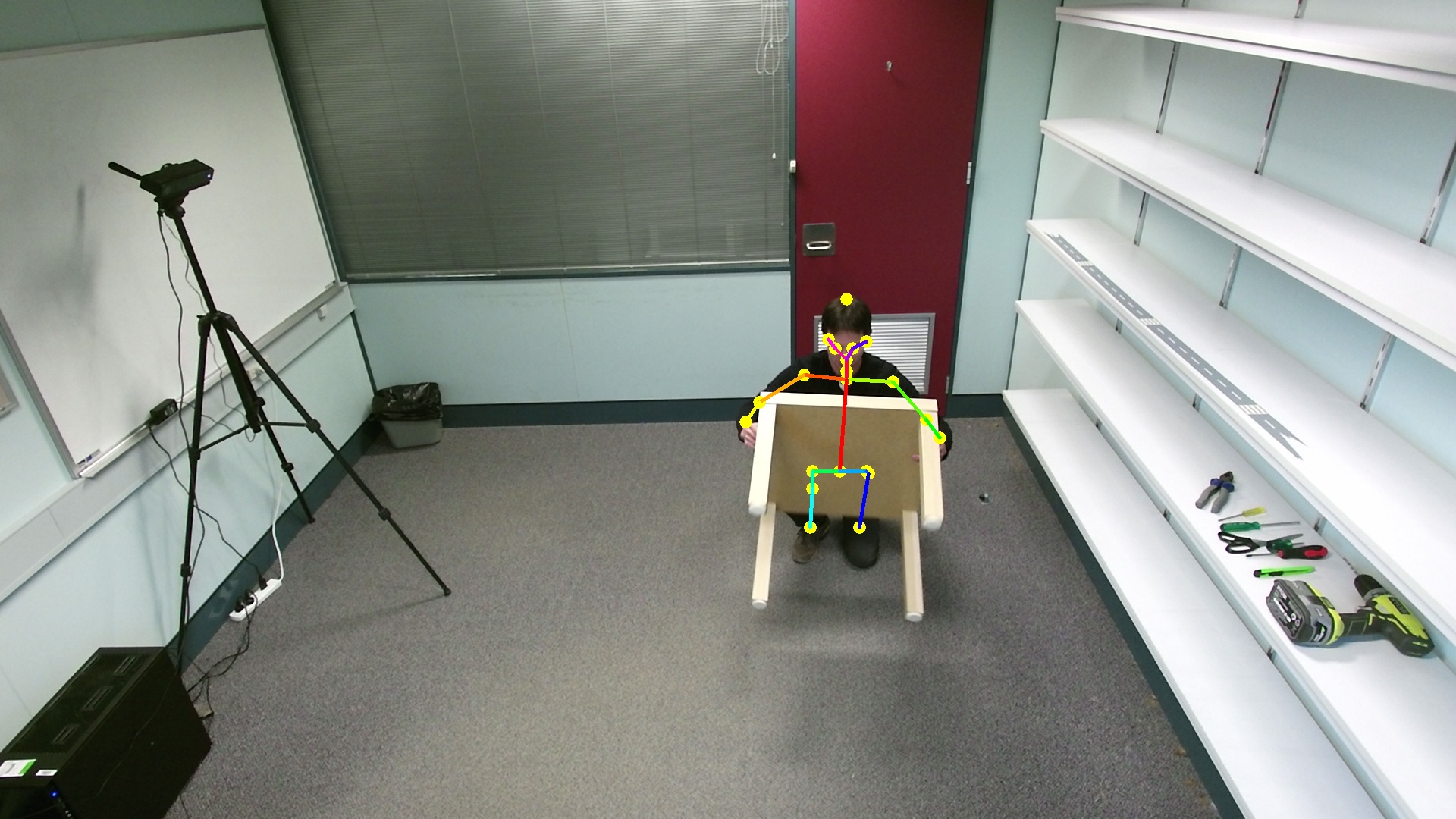

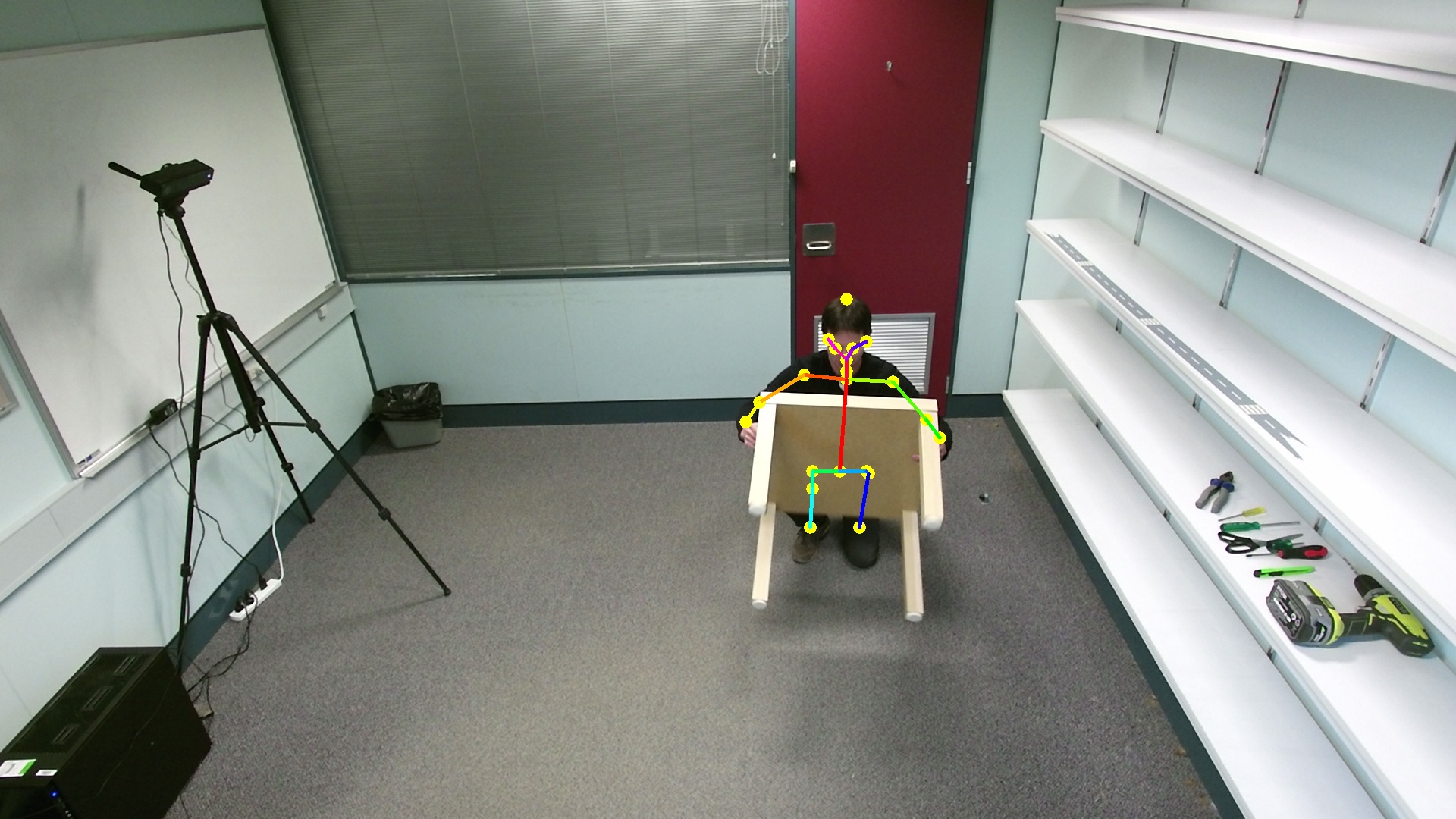

Human pose

Object segmentation and tracking

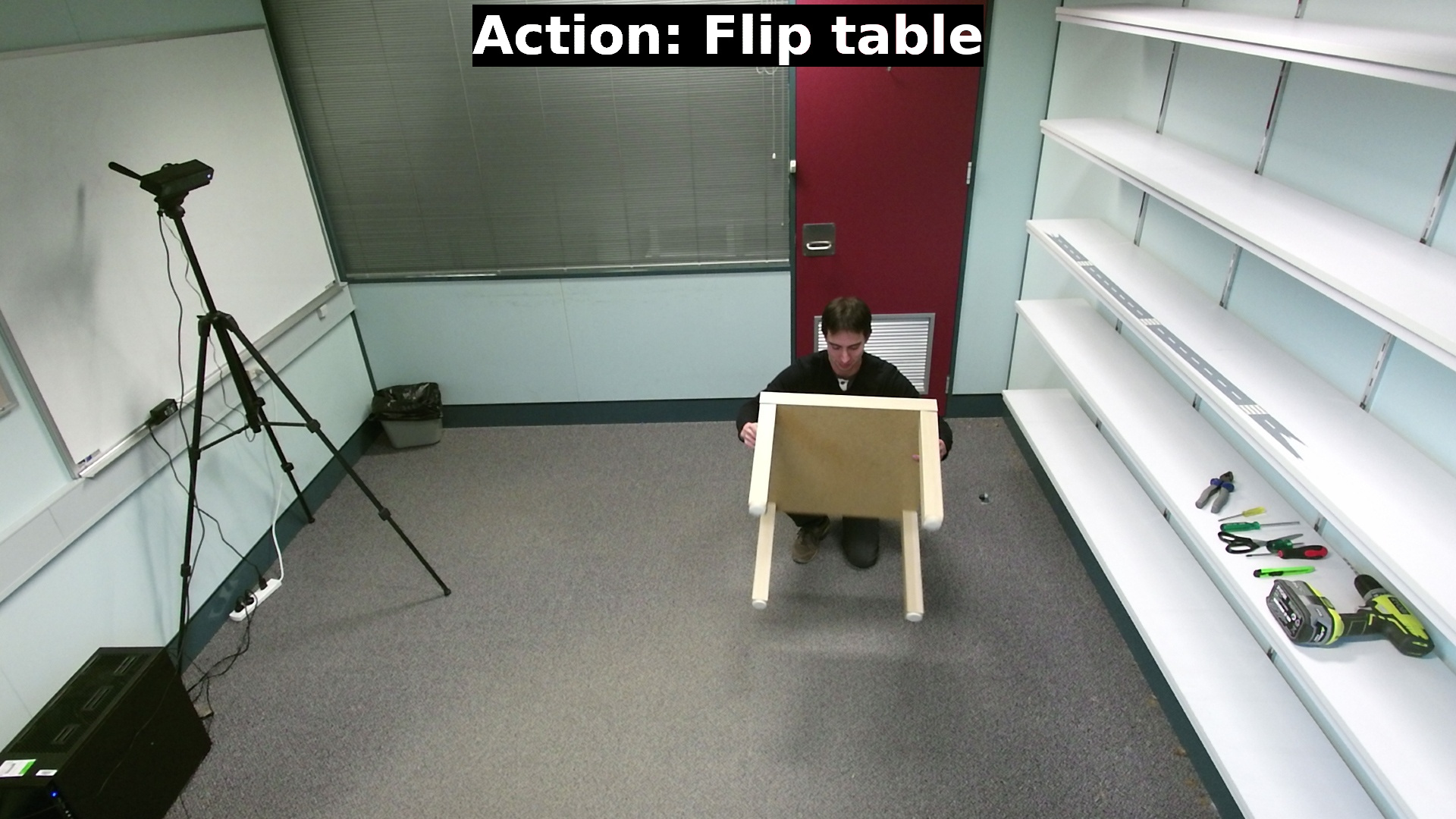

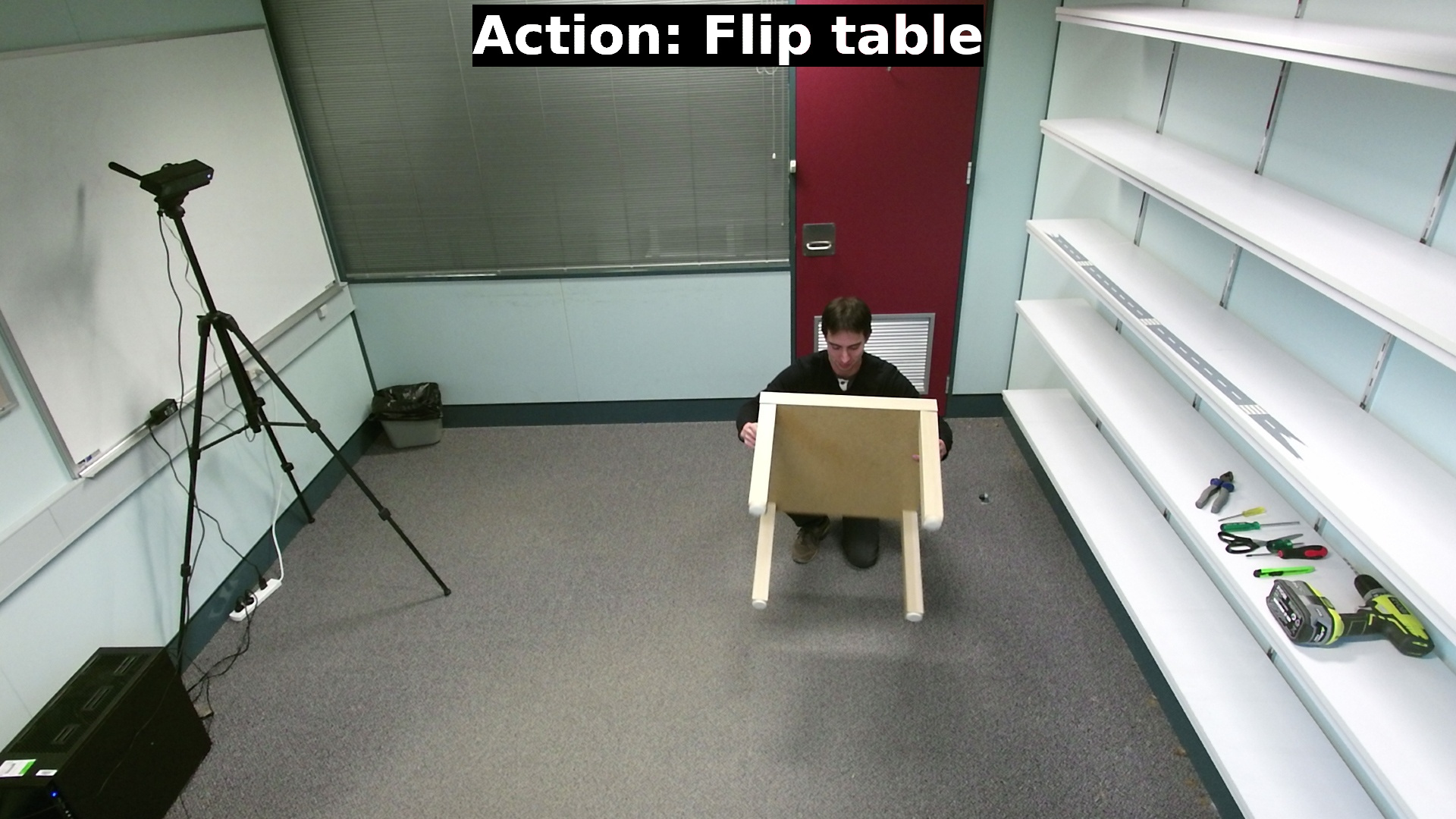

Atomic actions

Yizhak Ben-Shabat, Xin Yu, Fatemeh Saleh, Dylan Campbell, Cristian Rodriguez, Hongdong Li, Stephen Gould

The Australian National University (ANU), Australian Centre for Robotic Vision (ACRV)

What is the IKEA ASM dataset?

The IKEA ASM dataset is a multi-modal, multi-view video dataset of furniture assembly tasks, designed to enable rich analysis and understanding of human activities. It contains 371 samples of furniture assemblies together with their ground-truth annotations. Each sample includes three RGB views, one depth stream, atomic actions, human poses, object segments, object tracking, and extrinsic camera calibration. We also provide code for data processing, including depth to point cloud conversion, surface normal estimation, visualization, and evaluation, in a dedicated GitHub repository. More information can be found in the paper.
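As an illustration of what depth to point cloud conversion involves, the following is a minimal sketch of standard pinhole back-projection. It is not the code from the repository: the function name, the intrinsics `fx`, `fy`, `cx`, `cy`, and the assumption that raw depth is stored in millimetres are all placeholders chosen for the example, and the real values should come from the dataset's calibration files.

```python
import numpy as np


def depth_to_point_cloud(depth_raw, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project a depth image into an (N, 3) array of 3D points.

    depth_raw: (H, W) array of raw depth values; zeros mark missing depth.
    fx, fy, cx, cy: pinhole intrinsics in pixels (hypothetical placeholders,
        not values taken from the dataset).
    depth_scale: factor converting raw units to metres (assumes millimetres).
    """
    depth = np.asarray(depth_raw, dtype=np.float64) * depth_scale
    h, w = depth.shape
    # Pixel coordinate grids: u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0  # drop pixels with no depth measurement
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


# Toy input: a flat surface 2 m from the camera with one missing pixel.
depth = np.full((4, 4), 2000, dtype=np.uint16)
depth[0, 0] = 0
points = depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
print(points.shape)
```

The points are expressed in the camera frame of the depth sensor. Mapping them into another view would additionally require the extrinsic calibration provided with each sample.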

Characteristics

Human pose

Object segmentation and tracking

Atomic actions

If you use the IKEA assembly data or code please cite:

@article{ben2020ikea,
  title={The IKEA ASM Dataset: Understanding People Assembling Furniture through Actions, Objects and Pose},
  author={Ben-Shabat, Yizhak and Yu, Xin and Saleh, Fatemehsadat and Campbell, Dylan and Rodriguez-Opazo, Cristian and Li, Hongdong and Gould, Stephen},
  journal={arXiv preprint arXiv:2007.00394},
  year={2020}
}

The dataset and benchmarks provided on this page are published under the Creative Commons Attribution-NonCommercial 4.0 International License. This means you can use them for research and educational purposes, but you must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use. We make no representations or warranties. You may not use the material for commercial purposes. For commercial licensing, please contact us at anu.ikea.dataset[at]gmail.com. The code is provided under the MIT license.

The dataset is available for download as zipped video files in a shared Google Drive folder.
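Once the archives have been downloaded, they need to be unpacked before use. The sketch below shows one way to do this with Python's standard library. The function name and the directory names in the usage comment are hypothetical, and the layout of the extracted files is not specified on this page.

```python
import zipfile
from pathlib import Path


def extract_archives(download_dir, output_dir):
    """Extract every .zip archive in download_dir into its own subfolder.

    Returns the list of folders that were created, one per archive.
    """
    download_dir = Path(download_dir)
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    extracted = []
    for archive in sorted(download_dir.glob("*.zip")):
        target = output_dir / archive.stem  # folder named after the archive
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        extracted.append(target)
    return extracted


# Example call with placeholder paths (adjust to your local download folder):
# extract_archives("downloads/ikea_asm", "data/ikea_asm")
```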

Use the code in our GitHub repository to train and test your models...

This work was supported by the Australian Centre for Robotic Vision (ACRV). We would also like to thank Dr. Lars Petersson from Data61/CSIRO for helpful discussions and for providing additional compute resources for this project.